The Perils of Generative AI. Part 1 - An Introduction to Generative AI

This essay is an introduction to Generative AI. It is the first in a series exploring the harm that Generative AI models can do to a company's business and financial health.

What is Generative AI?

Generative AI refers to a category of artificial intelligence techniques that involve generating content based on patterns and examples learned from training data. The content could be text, images, audio, or videos.

For example, ChatGPT uses GPT-3.5, a language model that has no explicit knowledge of specific facts or events beyond what it learned from its training data. Its responses are based purely on patterns and statistical associations in the text it was trained on.

How do Generative AI models work?

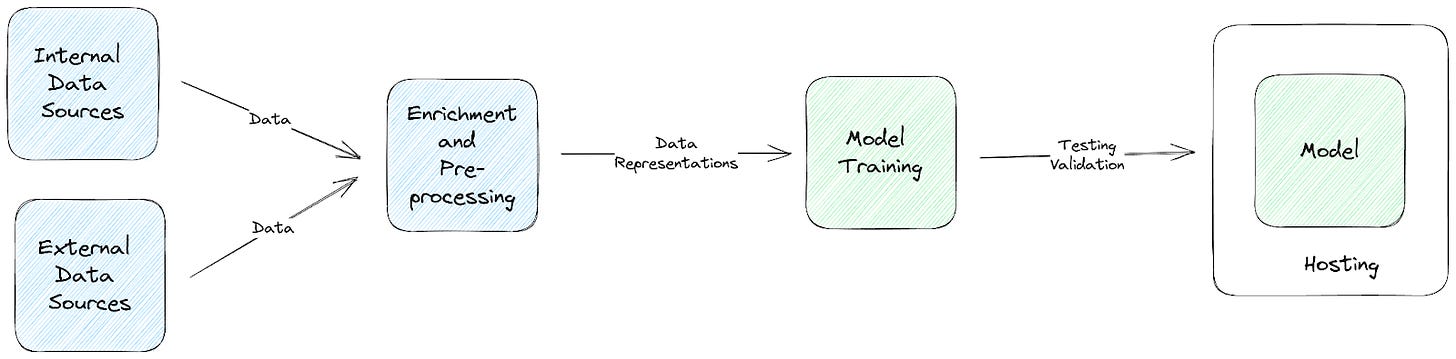

Generative AI models learn from extensive datasets, extracting patterns and information that they later use to generate content. Building and using them involves several steps, which I present here in a deliberately simplified way.

The Training phase is about teaching the model about your data. The Inference phase, on the other hand, is about how the model generates output when users query and interact with it. Let's take a deeper look at both.

Both the Training and Inference mechanisms expose companies to substantial risks that should be prevented or mitigated. We cover these risks in the second article in this series.

Training

Training is about teaching the model to learn from the data provided and improve its performance on specific tasks. There are three main Training mechanisms used for Generative AI, namely, Pre-training, Fine-tuning, and Prompt Engineering.

1. Initial Training or Pre-training

The model is initially trained on a massive dataset. Depending on the model, the dataset may include books, articles, websites, and even your enterprise data from various internal sources.

During pre-training, the model learns the statistical patterns, grammar, and semantics of the language by predicting the next word in a sentence given the context of previous words. This process helps the model capture the knowledge and language understanding from the training data.
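As a rough illustration of "predicting the next word given the previous words", here is a minimal sketch that counts which word follows which in a tiny corpus. This is only an analogy: real Generative AI models use neural networks trained on vastly more data, and the function names and toy corpus below are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus, standing in for the massive pre-training dataset.
toy_corpus = [
    "the model learns patterns",
    "the model generates text",
    "the model learns grammar",
]
bigram_model = train_bigram_model(toy_corpus)
print(predict_next_word(bigram_model, "model"))  # learns
```

The prediction is "learns" simply because that word followed "model" most often in the data. The model has no notion of whether the statement is true, which is the point made earlier about statistical associations.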

The Pre-training step is conducted by the organization that creates the base model. This could be your own company or a vendor, such as OpenAI or Anthropic, that offers you its AI model.

There are a few key parts in this step that are worth calling out:

Data Gathering: Generative AI models are typically trained on a large dataset. It is very common to use multiple data sources, often a mix of internal and external ones.

Data Preprocessing: Gathered data from various sources must be cleaned, made error-free, and appropriately structured so that it can be used in training the model. It is important to note here that the representation and content of the data may have changed compared to its sources.

Pre-training: The model is then trained on the processed data. The model iteratively develops a strong foundation of knowledge, enabling it to generate coherent and contextually relevant output. The end result of the Pre-training process is called a Foundational Model (or Base Model). GPT-4, PaLM, and Dolly are all examples. At this point, serious security risks manifest in the model. It now holds its own representations of sensitive data, including PII, financial data, trade secrets, and more. Although these representations are not human-readable, the model may output the underlying data at inference time.
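To make the Data Preprocessing step above more concrete, here is a minimal sketch of a cleaning pass that normalizes whitespace, drops empty records, and removes duplicates. The cleaning rules and sample records are assumptions chosen for illustration; real pipelines are far more involved.

```python
import re

def preprocess(records):
    """Normalize whitespace, drop empty records, and remove case-insensitive duplicates."""
    cleaned = []
    seen = set()
    for raw in records:
        # Collapse runs of spaces, tabs, and newlines into single spaces.
        text = re.sub(r"\s+", " ", raw).strip()
        if not text:
            continue
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

# Hypothetical records gathered from several sources.
raw_records = [
    "  Quarterly   revenue grew.\n",
    "quarterly revenue grew.",
    "",
    "Customer churn fell\tslightly.",
]
processed = preprocess(raw_records)
print(processed)  # ['Quarterly revenue grew.', 'Customer churn fell slightly.']
```

Four source records became two, and neither matches its source character for character. This is the sense in which the representation and content of the data may differ from its sources after preprocessing.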

2. Fine-tuning

The dictionary defines fine-tuning as:

make small adjustments to (something) in order to achieve the best or a desired performance.

Generative AI models can also be fine-tuned to achieve the best performance and higher accuracy for industry-, domain-, or company-specific use cases.

During fine-tuning, a model is trained on an additional dataset that is specific to the desired task or domain. The dataset consists of examples related to the task, such as conversations or specific types of text. The training process involves adjusting the model's parameters using the new task-specific data while retaining the knowledge acquired during pre-training.
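The idea of adjusting a model with task-specific data while retaining earlier knowledge can be sketched with a toy word-count model. This is an analogy only: real fine-tuning updates the weights of a neural network, not word counts, and every name and sentence below is hypothetical.

```python
from collections import Counter, defaultdict

def train(model, corpus):
    """Add next-word counts from `corpus` to an existing model (a dict of Counters)."""
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            model[current_word][next_word] += 1
    return model

def most_likely_next(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    followers = model.get(word)
    return followers.most_common(1)[0][0] if followers else None

# "Pre-training" on general text.
general_corpus = [
    "the bank of the river",
    "the bank of the river flooded",
    "the bank approved the loan",
]
model = train(defaultdict(Counter), general_corpus)
before = most_likely_next(model, "bank")

# "Fine-tuning" on a small, domain-specific (here: finance) dataset.
finance_corpus = [
    "the bank approved the mortgage",
    "the bank approved the transfer",
]
model = train(model, finance_corpus)
after = most_likely_next(model, "bank")

print(before, "->", after)  # of -> approved
```

After the second round of training, the model favors the finance sense of "bank", yet it still remembers patterns from the general corpus, such as "of" being followed by "the".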

Fine-tuning is conducted in a similar fashion to the Pre-training process explained above.

Fine-tuning is typically performed by a company, either using foundational models from vendors like OpenAI or training open-source models in-house for its specific use cases or tasks.

3. Prompt Engineering

Prompt engineering is a bit different from Pre-training and Fine-tuning: it does not change the model's parameters. Instead, it involves crafting the input prompt given to a Generative AI model to elicit desired responses or behaviors. The goal is to design prompts so that the model returns better results.
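As a small illustration, a prompt can be assembled from a template that states a role, supplies context, and constrains the answer. The template wording and the `build_prompt` helper are assumptions made for this sketch, not a recommended or standard format.

```python
def build_prompt(question, context, audience="a non-technical manager"):
    """Assemble a prompt with a role, grounding context, and output constraints."""
    return (
        f"You are a helpful assistant writing for {audience}.\n"
        "Answer using only the context below. "
        "If the answer is not in the context, say you do not know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer in at most three sentences."
    )

# Hypothetical question and context.
prompt = build_prompt(
    question="What is fine-tuning?",
    context="Fine-tuning trains a model on an additional task-specific dataset.",
)
print(prompt)
```

The same question asked without the role, context, and length limit would leave the model free to answer at any length and from any of its training data. The template narrows that down without touching the model itself.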

Inference

This is where the Generative AI model actually provides value to users. Remember your ChatGPT screen where you ask questions? Put simply, your question gets chopped into smaller units called tokens, which are encoded into a numerical representation that the model can process. The model then draws a contextual understanding from this representation and uses what it learned during training to generate a response.

Do you think humans can use this response? Nope. (Unless you are Will Smith from I, Robot.) The model just talks in numerical representations. The response is therefore decoded, post-processed further, and passed back to the user.
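The encode and decode steps can be sketched with a toy tokenizer that splits on whitespace and maps each word to a number. Production models use subword tokenizers with much larger vocabularies, so the vocabulary, the `<unk>` placeholder, and the function names here are simplifying assumptions.

```python
def build_vocab(texts):
    """Assign an integer ID to every distinct word; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for token in text.lower().split():
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Split text into tokens and convert each token to its numeric ID."""
    return [vocab.get(token, vocab["<unk>"]) for token in text.lower().split()]

def decode(token_ids, vocab):
    """Convert numeric IDs back into human-readable text."""
    id_to_token = {token_id: token for token, token_id in vocab.items()}
    return " ".join(id_to_token[token_id] for token_id in token_ids)

vocab = build_vocab(["what is generative ai", "generative ai creates content"])
ids = encode("What is generative AI", vocab)
print(ids)                 # [1, 2, 3, 4]
print(decode(ids, vocab))  # what is generative ai
```

The list of integers is what the model actually works with. Only after decoding does the output become text that a person can read.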

Inference is a technical term in Machine Learning. We can think of the usage of these models in terms of Interactions. People may directly interact with Generative AI or may use applications that in turn use Generative AI through APIs.

People

Employees at your company might already love using ChatGPT. It provides an amazing productivity boost and speeds up everyday tasks such as learning and writing.

Applications

Many companies have already adopted ChatGPT and similar third-party models to help their users benefit from Generative AI. Examples include Duolingo and Udacity.

What goes around comes around

The dangers of adopting Generative AI irresponsibly begin to hurt when the knowledge representations of sensitive data start leaking out to unauthorized users. This can have a catastrophic financial impact in terms of litigation, brand value, and even regulatory fines. A recent example was the Samsung trade secrets case. Employees seeking productivity gains unintentionally leaked trade secrets to ChatGPT. This resulted in Samsung applying a company-wide ban on generative AI tools.

To learn more about the serious risks surrounding Generative AI, please read the next essay in this series: An Overview of Security Risks.

About Us

We are building Aimon AI, a complete AI-native Security Platform. We have over 25 years of combined experience in leading ML/AI, Security, Monitoring, Logging, and Analytics products at companies such as Netflix, AppDynamics, Thumbtack, and Salesforce. We are proud to be advised by renowned Privacy, Security, and AI experts. Please reach out to us at info@aimon.ai.